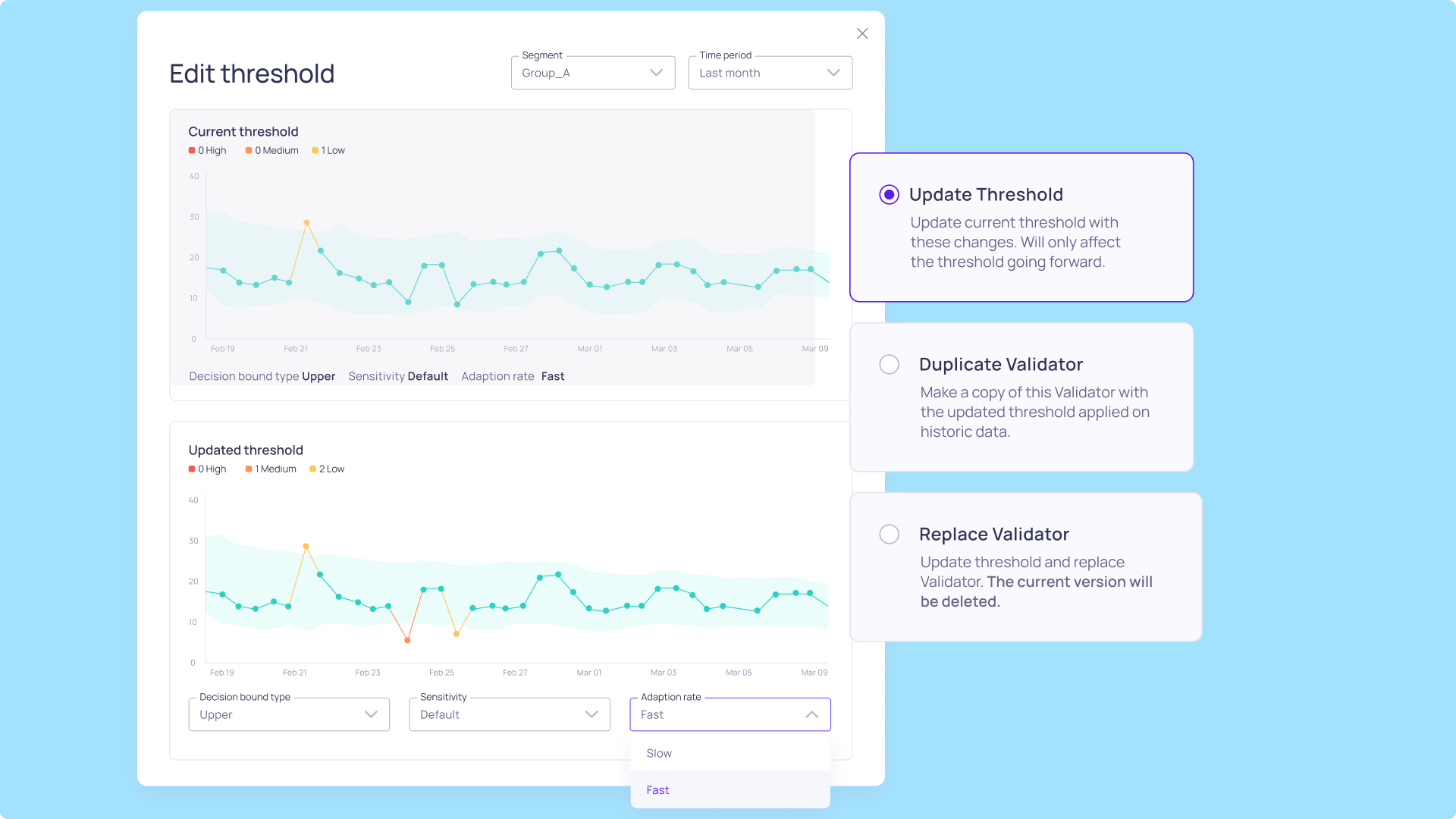

Getting the most out of automated anomaly detection often means fine-tuning how the system learns from your data. Validio already identifies outliers automatically, but knowing how your data behaves lets you monitor it more precisely. That's why we've introduced the Adaption Rate setting for Dynamic Thresholds, which gives you more control over how quickly the anomaly detection bounds respond to changes in your data.

🚩 TABLE OF CONTENTS

→ What are dynamic thresholds?

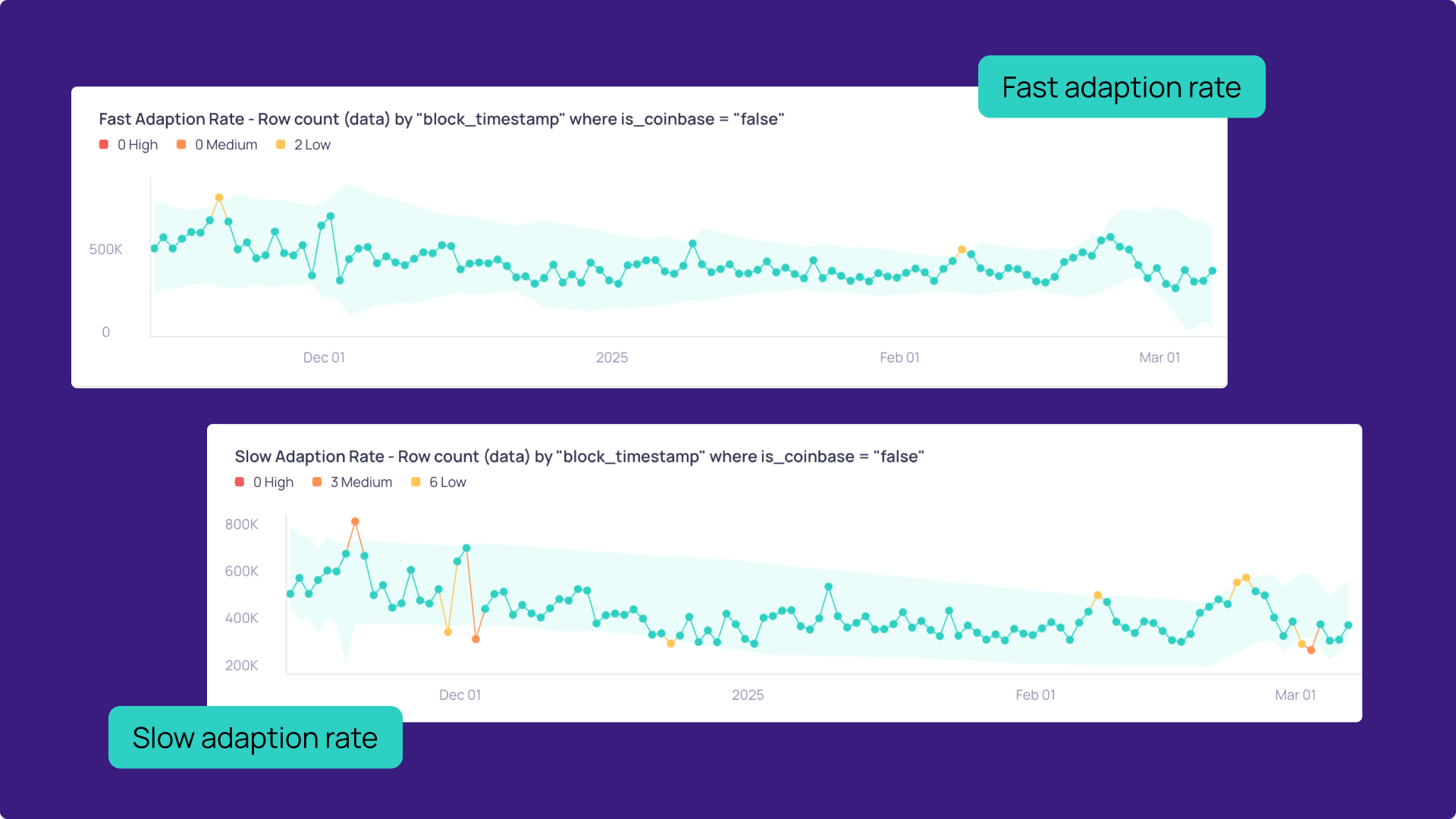

→ Understanding adaption rates: fast vs slow